Incident responders often investigate computer incidents for clients whose security posture may be weak: no centralized SIEM logging, no mature EDR deployment, and a general inability to centrally query or otherwise collect data from across the network.

This is a real problem during an investigation. It means you can’t rapidly pivot on Indicators of Compromise (IOCs) such as IP addresses and ports, process information (names, command lines, etc.), user activity, Scheduled Task or Service metadata (such as known-bad names or binaries) and other system information. Without centralized tooling or logging, hunting on IOCs or Indicators of Attack (IOAs) can be extremely difficult.

I’ve previously written scripts to tackle this problem, such as WMIHunter (https://github.com/joeavanzato/WMIHunter/tree/main), along with variations that use WMI at scale in C# and Go. Recently, though, I wanted to revisit the problem and build a more modular and flexible solution.

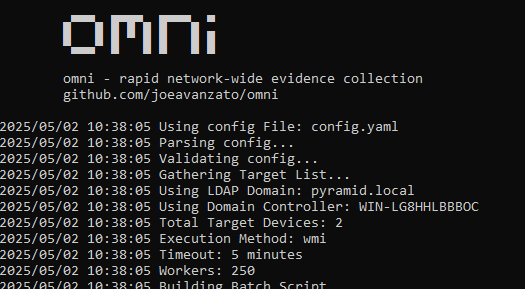

I’d like to introduce omni (https://github.com/joeavanzato/omni), a tool I wrote to solve this problem and to give DFIR professionals another open-source option.

At its core, omni is an orchestration utility that lets analysts execute commands on hundreds or thousands of remote devices simultaneously and transparently collect and aggregate the output. Whatever command, script or tool you want to execute and collect output from, omni makes it easy to do so at scale.
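The core fan-out idea (run one task against many hosts with a bounded pool of workers, then gather a result or an error per host) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not omni's implementation: `run_on_targets` and the `task` callable are hypothetical, and the actual remote-execution step (Scheduled Tasks or WMI in omni's case) is stubbed out.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Union


def run_on_targets(
    targets: Iterable[str],
    task: Callable[[str], str],
    workers: int = 250,
) -> Dict[str, Union[str, Exception]]:
    """Run `task` against every target concurrently, at most `workers` at a time.

    Hypothetical helper: `task` stands in for "execute the directive on the
    host and return the path of the collected output". Failures are recorded
    per host so that one unreachable machine does not stop the others.
    """
    results: Dict[str, Union[str, Exception]] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(task, host): host for host in targets}
        for future in as_completed(futures):
            host = futures[future]
            try:
                results[host] = future.result()
            except Exception as exc:  # keep going, but remember what failed
                results[host] = exc
    return results


if __name__ == "__main__":
    def fake_collect(host: str) -> str:
        # Stand-in for remote execution plus file retrieval.
        if host == "offline-pc":
            raise ConnectionError(f"{host} unreachable")
        return f"devices/{host}/processes.csv"

    out = run_on_targets(["ws01", "ws02", "offline-pc"], fake_collect, workers=2)
    for host in sorted(out):
        print(host, "->", out[host])
```

Capturing exceptions per host, rather than letting the first one propagate, matters at this scale: in a large estate some fraction of machines will always be offline or unreachable.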

Can omni help you?

Ask yourself the following questions. If the answer to any of them is ‘yes’, omni can help you.

- Do you need to execute one or more commands/scripts/tools on multiple devices concurrently and collect the results?

- Do you need to collect data from a large number of devices that are not connected to the internet?

- Have you ever run into issues trying to rapidly pivot on indicators of compromise across a large number of devices due to lack of data/logging/agents?

- Does the current environment lack a centralized logging solution or EDR that can help you quickly query devices?

- Do you need to execute a series of triage scripts on one or more networked devices?

As an example, let’s consider running processes and TCP connections, which are among the most commonly collected data sources when hunting reactively for known-bad activity during an engagement. omni works by letting users build a YAML configuration file containing command directives to be executed on targets; you can add, remove or modify directives in this file to meet any unique requirements. Below is one way you could capture process data with omni:

```yaml
command: powershell.exe -Command "Get-WmiObject -Class Win32_Process -Locale MS_409 -ErrorAction SilentlyContinue | Select PSComputerName,ProcessName,Handles,Path,Caption,CommandLine,CreationDate,Description,ExecutablePath,ExecutionState,Handle,InstallDate,Name,OSName,ProcessId,ParentProcessId,Priority,SessionId,Status,TerminationDate | Export-Csv -Path '$FILENAME$' -NoTypeInformation"
file_name: $time$_processes.csv
merge: csv
id: processes
tags: [quick, process, processes, builtin]
```

The above configuration tells omni to run the PowerShell command, automatically replacing placeholder variables such as `$FILENAME$` with the specified file name. Once execution is complete, omni knows to collect that file from each target.
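The substitution step can be sketched in Python as follows. This sketch assumes that `$time$` expands to a timestamp and that `$FILENAME$` in the command is replaced by the expanded `file_name`. The timestamp format is an assumption rather than omni's documented behavior, and `expand_directive` is a hypothetical helper.

```python
from datetime import datetime
from typing import Dict, Optional


def expand_directive(directive: Dict[str, str], now: Optional[datetime] = None) -> Dict[str, str]:
    """Return a copy of the directive with placeholders filled in.

    Assumed behaviour (illustrative only): `$time$` becomes a compact
    timestamp and `$FILENAME$` in the command becomes the expanded file name.
    """
    now = now or datetime.now()
    stamp = now.strftime("%Y%m%d%H%M%S")  # assumed timestamp format
    file_name = directive["file_name"].replace("$time$", stamp)
    expanded = dict(directive)
    expanded["file_name"] = file_name
    expanded["command"] = directive["command"].replace("$FILENAME$", file_name)
    return expanded


if __name__ == "__main__":
    directive = {
        "id": "processes",
        "file_name": "$time$_processes.csv",
        # Shortened stand-in for the WMI command in the configuration above.
        "command": "powershell.exe -Command \"Get-Process | Export-Csv -Path '$FILENAME$' -NoTypeInformation\"",
    }
    result = expand_directive(directive, datetime(2024, 5, 1, 13, 30, 0))
    print(result["file_name"])  # 20240501133000_processes.csv
    print(result["command"])
```

Expanding the file name once and reusing it for the command guarantees that the file the command writes is the same file the collector later looks for.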

It is also possible to copy a script to the target and execute it, which lets analysts use omni to run more complex triage tools remotely.

```yaml
command: powershell.exe C:\Windows\temp\ExtractLogons.ps1 -DaysBack 14 -OutputFile $FILENAME$
file_name: $time$_LogonActivity.csv
merge: csv
id: LogonActivity
tags: [access, user, builtin]
dependencies: [utilities\ExtractLogons.ps1]
```

The dependencies block lets users specify one or more files or directories that must exist on the target before the directive executes. Dependencies are always copied into a single directory (C:\Windows\Temp) and removed once execution is complete. A dependency can also be an HTTP URL, in which case the file is retrieved while the configuration is being parsed.
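The following Python sketch makes the staging rule concrete by working out where each dependency would land on a target, given that everything is copied into C:\Windows\Temp. It only plans paths; the copy, download and cleanup mechanics are omitted. `plan_dependencies` and its return shape are hypothetical, and naming a downloaded file after the last URL path segment is an assumption.

```python
import ntpath
import posixpath
from typing import List, NamedTuple
from urllib.parse import urlparse

STAGING_DIR = r"C:\Windows\Temp"  # staging directory named in the post


class StagedDependency(NamedTuple):
    source: str       # local path or URL as written in the config
    is_url: bool      # True if it must be downloaded first
    target_path: str  # where it would sit on the remote host


def plan_dependencies(dependencies: List[str]) -> List[StagedDependency]:
    """Map each dependency entry to its destination under STAGING_DIR."""
    plan = []
    for dep in dependencies:
        parsed = urlparse(dep)
        if parsed.scheme in ("http", "https"):
            # Assumption: the downloaded file keeps the URL's last path segment.
            name = posixpath.basename(parsed.path)
            plan.append(StagedDependency(dep, True, ntpath.join(STAGING_DIR, name)))
        else:
            # Local file or directory: only its final component is kept,
            # e.g. utilities\ExtractLogons.ps1 -> ExtractLogons.ps1.
            name = ntpath.basename(ntpath.normpath(dep))
            plan.append(StagedDependency(dep, False, ntpath.join(STAGING_DIR, name)))
    return plan


if __name__ == "__main__":
    for item in plan_dependencies([
        r"utilities\ExtractLogons.ps1",
        "KAPE",
        "https://raw.githubusercontent.com/joeavanzato/Trawler/refs/heads/main/trawler.ps1",
    ]):
        print(item.target_path, "(download)" if item.is_url else "(copy)")
```

Because every dependency is flattened into one directory, two dependencies with the same final name would collide. That is worth keeping in mind when composing configurations.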

For example, a dependency can point directly at a URL:

```yaml
dependencies: [https://raw.githubusercontent.com/joeavanzato/Trawler/refs/heads/main/trawler.ps1]
```

Typically, though, if your configuration requires remote files, you will be better off using the preparations section of the configuration. This section holds commands that are executed to prepare the analysis environment, which usually means downloading the tools you want to deploy to targets, such as Autoruns or the Eric Zimmerman parsing toolset.

```yaml
preparations:
  - command: powershell.exe -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/EricZimmerman/Get-ZimmermanTools/refs/heads/master/Get-ZimmermanTools.ps1'))"
    note: Download and execute Get-ZimmermanTools into current working directory
  - command: powershell.exe -Command "iwr -Uri 'https://download.sysinternals.com/files/Autoruns.zip' -OutFile .\Autoruns.zip ; Expand-Archive -Path Autoruns.zip -Force"
    note: Download and unzip Autoruns
```

Preparations help ensure that required dependencies exist before your configuration is executed.
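Because preparations run before anything is deployed, one natural way to apply them is sequentially on the analyst's machine, stopping at the first failure so that nothing is deployed with a missing tool. The Python sketch below illustrates that idea. The fail-fast policy and `run_preparations` are assumptions for illustration, not a description of how omni handles a failed preparation.

```python
import subprocess
from typing import Dict, List


def run_preparations(preparations: List[Dict[str, str]]) -> bool:
    """Run each preparation command in order; return False on the first failure.

    Hypothetical helper: commands run through the local shell in the current
    working directory, which is where the example notes expect downloads to land.
    """
    for step in preparations:
        note = step.get("note", step["command"])
        print(f"[prep] {note}")
        completed = subprocess.run(step["command"], shell=True)
        if completed.returncode != 0:
            print(f"[prep] failed with exit code {completed.returncode}: {note}")
            return False
    return True


if __name__ == "__main__":
    ok = run_preparations([
        {"command": "echo preparing", "note": "Trivial step that should succeed"},
    ])
    print("ready" if ok else "not ready")
```

Stopping early trades completeness for safety. If some preparations are optional, a variant could log failures and continue instead.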

When omni runs, it creates two folders: ‘devices’ and ‘aggregated’. Inside devices, a directory is created for each target device to hold all data collected from that target. Depending on configuration settings, aggregated stores any merged files once collection is complete. For example, with the first configuration in this post, it would hold the running processes of all computers.
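The Python sketch below shows roughly what a `merge: csv` step could look like against that layout. It finds each device's copy of a directive's output under devices/&lt;host&gt;/ and concatenates the rows into a single file under aggregated/. The glob pattern, the union-of-columns handling and `merge_csv` itself are assumptions for illustration; omni's own merge logic may differ.

```python
import csv
import tempfile
from pathlib import Path
from typing import Dict, List


def merge_csv(root: Path, suffix: str, out_name: str) -> int:
    """Concatenate devices/*/*<suffix> into aggregated/<out_name>; return the row count.

    Hypothetical helper: output columns are the union of every input header,
    in first-seen order, and cells missing from a given file are left blank.
    """
    rows: List[Dict[str, str]] = []
    fieldnames: List[str] = []
    for path in sorted((root / "devices").glob(f"*/*{suffix}")):
        # utf-8-sig also accepts files that start with a byte-order mark.
        with path.open(newline="", encoding="utf-8-sig") as fh:
            reader = csv.DictReader(fh)
            for name in reader.fieldnames or []:
                if name not in fieldnames:
                    fieldnames.append(name)
            rows.extend(reader)
    out_dir = root / "aggregated"
    out_dir.mkdir(parents=True, exist_ok=True)
    with (out_dir / out_name).open("w", newline="", encoding="utf-8") as fh:
        # extrasaction="ignore" drops overflow cells from malformed rows.
        writer = csv.DictWriter(fh, fieldnames=fieldnames, restval="", extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Fake per-device output shaped like the processes directive's CSV.
        for host, proc in [("WS01", "explorer.exe"), ("WS02", "svchost.exe")]:
            device_dir = root / "devices" / host
            device_dir.mkdir(parents=True)
            (device_dir / "20240501_processes.csv").write_text(
                f"PSComputerName,ProcessName\n{host},{proc}\n", encoding="utf-8"
            )
        print(merge_csv(root, "_processes.csv", "processes.csv"))  # 2
```

Taking the union of headers keeps the merge robust when different hosts emit slightly different columns, for example across PowerShell versions.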

Keep in mind that omni is designed for rapid, lightweight, network-wide hunting. That said, it can execute and collect any type of evidence. For example, you could launch KAPE remotely and collect the resulting zip files from specified targets, as shown below:

```yaml
command: C:\windows\temp\kape\kape.exe --tsource C --tdest C:\Windows\temp\kape\machine\ --tflush --target !SANS_Triage --zip kape && powershell.exe -Command "$kapezip = Get-ChildItem -Path C:\Windows\temp\kape\machine\*.zip; Rename-Item -Path $kapezip.FullName -NewName '$FILENAME$'"
file_name: $time$_kape.zip
merge: pool
id: kape
add_hostname: True
dependencies: [KAPE]
```

Of course, doing this across thousands of devices would result in a massive amount of data, but for a more limited scope it can be a highly effective way to collect evidence. Choose your configurations and targets carefully.
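The post does not spell out what `merge: pool` and `add_hostname: True` do internally. One plausible reading, which is purely an assumption here, is that pooled files are copied side by side into aggregated/ with the device name prefixed, so that identically named zips from different hosts do not overwrite each other. The following Python sketch implements that interpretation with a hypothetical `pool_files` helper.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def pool_files(root: Path, suffix: str, add_hostname: bool = True) -> List[Path]:
    """Copy devices/<host>/*<suffix> into aggregated/, optionally prefixing '<host>_'.

    Hypothetical helper illustrating one reading of `merge: pool` plus
    `add_hostname`; without the prefix, same-named files would overwrite
    each other in the shared output directory.
    """
    out_dir = root / "aggregated"
    out_dir.mkdir(parents=True, exist_ok=True)
    pooled = []
    for src in sorted((root / "devices").glob(f"*/*{suffix}")):
        host = src.parent.name  # the per-device directory is named after the target
        name = f"{host}_{src.name}" if add_hostname else src.name
        dest = out_dir / name
        shutil.copy2(src, dest)
        pooled.append(dest)
    return pooled


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for host in ("WS01", "WS02"):
            device_dir = root / "devices" / host
            device_dir.mkdir(parents=True)
            (device_dir / "20240501_kape.zip").write_bytes(b"placeholder")
        for path in pool_files(root, "_kape.zip"):
            print(path.name)
```

Copying rather than moving leaves the per-device directories intact, so the original collection remains available if the pooled set needs to be rebuilt.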

Below are some common command-line examples for launching omni:

```
omni.exe -tags builtin
```

- Launch omni with every directive in .\config.yaml tagged 'builtin', using the default timeout (15) and worker (250) settings, Scheduled Tasks for remote execution, and an Active Directory query for enabled computers to use as targets

```
omni.exe -workers 500 -timeout 30 -tags quick,process
```

- Add more workers, increase the per-target timeout and only use configurations with the specified tags

```
omni.exe -targets hostname1,hostname2,hostname3
omni.exe -targets targets.txt
```

- Use the specified computer targets, given on the command line or in a file

```
omni.exe -method wmi
```

- Deploy omni using WMI instead of Scheduled Tasks for remote execution

```
omni.exe -config configs\test.yaml
```

- Execute a specific configuration file

Ultimately, you can use omni to launch any type of script, command or software remotely at scale on any number of targets. I’ve often found myself on engagements for clients who lack effective SIEM or EDR tooling, which means that when we find a known-bad IP address, process name, binary path, service/task name or some other IOC, we have no effective way to hunt for it across the network.
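Two of the command-line options above are easy to illustrate. The `-targets` option accepts either a comma-separated list or a file, and `-tags` limits execution to directives carrying the given tags. The Python sketch below shows one way to interpret both. The file format (one host per line, `#` comments), the case-insensitive de-duplication and the any-tag-matches rule are assumptions, not omni's documented parsing behavior.

```python
import os
from typing import Dict, Iterable, List


def parse_targets(value: str) -> List[str]:
    """Treat `value` as a file of hostnames if it exists, else as a comma-separated list."""
    if os.path.isfile(value):
        with open(value, encoding="utf-8") as fh:
            lines = [line.strip() for line in fh]
        # Assumed file format: one host per line, blank lines and '#' comments ignored.
        hosts = [line for line in lines if line and not line.startswith("#")]
    else:
        hosts = [part.strip() for part in value.split(",") if part.strip()]
    # De-duplicate case-insensitively (Windows hostnames are not case-sensitive),
    # keeping first-seen order.
    seen, unique = set(), []
    for host in hosts:
        if host.lower() not in seen:
            seen.add(host.lower())
            unique.append(host)
    return unique


def select_directives(directives: Iterable[Dict], tags: str) -> List[Dict]:
    """Keep directives sharing at least one tag with the comma-separated `tags` (assumed rule)."""
    wanted = {t.strip().lower() for t in tags.split(",") if t.strip()}
    return [d for d in directives if wanted & {t.lower() for t in d.get("tags", [])}]


if __name__ == "__main__":
    print(parse_targets("hostname1, hostname2,HOSTNAME1"))  # ['hostname1', 'hostname2']
    directives = [
        {"id": "processes", "tags": ["quick", "process", "processes", "builtin"]},
        {"id": "LogonActivity", "tags": ["access", "user", "builtin"]},
        {"id": "kape"},  # no tags, so never selected by a tag filter
    ]
    print([d["id"] for d in select_directives(directives, "quick,process")])  # ['processes']
```

Under the any-tag rule, adding tags to `-tags` widens the selection. An all-tags rule would narrow it instead, so check omni's own behavior before relying on either.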

omni ships with a pre-built configuration file containing directives for common situations such as collecting running processes, TCP connections, installed Services/Tasks, etc. (https://github.com/joeavanzato/omni/blob/main/config.yaml). Before use, you should customize a configuration to meet the collection needs of the situation at hand. omni also includes example configuration files for specific use cases at https://github.com/joeavanzato/omni/configs.

Please consider omni during your next engagement in a low-posture network suffering a cyber incident. If you run into bugs or issues, or have any questions, please open an Issue on GitHub. I am eager to hear feature requests, ideas or problems with the software.